A research team from the University of Malaga (Andalusia, Spain) has analyzed how the brain responds to hearing different musical genres and has used artificial intelligence to classify the electrical signals that occur in it, differentiating according to whether what is heard is melody or voice and whether what is perceived by the ear is liked or disliked. The data obtained enable the development of applications that generate musical playlists based on the tastes or individual needs of each person.

Knowing how the brain works in response to different stimuli and which specific areas are activated in certain circumstances allows for the creation of tools to facilitate daily life. The researchers thereby offer an advance in the classification of the various brain responses to different musical genres and to sounds of differing natures (voice and music) in the article titled “Energy-based features and bi-LSTM neural network for EEG-based music and voice classification,” published in Neural Computing and Applications.

The experts have concluded that the brain responds differently to various stimuli and have identified these differences according to the energy levels of the electrical signals recorded in different brain areas. They have observed that these signals change depending on the musical genre perceived by the ear and on whether the song is liked or disliked.

This could open the door to app development for creating better music recommendations than current playlists. “If we know how the brain reacts depending on the musical style being listened to and the tastes of the listener, we can fine-tune the selection proposed to the user,” Lorenzo J. Tardón, researcher at the University of Malaga and one of the authors of the article, told Fundación Descubre.

To do this, they have defined a scheme for characterizing brain activity based on the relationships between electrical signals, detected in different areas by means of electroencephalography, and their classification using two types of tests: binary and multi-class.

“The first one uses tasks with simple algorithms, where we differentiate between spoken voice and music. The second, more complex, analyzes brain responses when listening to songs of different musical genres: ballad, classical, metal and reggaeton. In addition, it takes into account the musical taste of each individual,” adds the researcher.
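As a rough illustration of what "relationships between the energy of signals in different areas" could look like in practice, the sketch below computes the energy of each EEG channel over a short window and then the log-ratio of energies for every pair of channels. The article does not give the exact feature definition used in the paper, so the log-ratio, the function names and the channel labels in the example are assumptions made for illustration only.

```python
import math


def channel_energy(samples):
    """Energy of one channel over a window: the sum of squared samples."""
    return sum(x * x for x in samples)


def energy_relationships(window, eps=1e-12):
    """Log-ratio of energies for every pair of channels.

    `window` maps a channel name to its list of samples. The log-ratio is an
    assumed way of expressing an "energy relationship"; the paper may use a
    different definition. `eps` avoids division by zero on silent channels.
    """
    energies = {name: channel_energy(s) for name, s in window.items()}
    names = sorted(energies)
    features = {}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            features[(a, b)] = math.log((energies[a] + eps) / (energies[b] + eps))
    return features


# Hypothetical three-channel window (channel labels and values are made up).
window = {
    "Fz": [1.0, -1.0, 0.5],
    "Cz": [2.0, 0.0, -1.0],
    "Pz": [0.1, 0.2, 0.1],
}
for pair, value in energy_relationships(window).items():
    print(pair, round(value, 3))
```

A sequence of such feature vectors, one per time window, could then be labeled either for the binary task (voice or music) or for the multi-class task (genre and listener preference) described by the researchers.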

Artificial intelligence to study the brain

Neural networks are artificial intelligence tools for processing data in a manner similar to the way the human brain works. The results of this work were obtained using the neural network known as bidirectional LSTM, which learns from relationships between data that can be interpreted as long-term and short-term memory. It is a type of architecture used in deep learning that processes information in two directions, from the beginning to the end and vice versa. In this way, the model takes into account both the past and the future of each element in the sequence and builds context for classification.

Specifically, the neural network used to perform these classification tasks has 61 inputs that receive the data sequences containing the energy relationships between the different areas of the brain. The information is processed in the successive layers of the network to generate answers to the questions asked about the type of sound content heard or the tastes of the person listening to a piece of music.
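To make the bidirectional idea concrete, here is a minimal sketch of a bidirectional LSTM forward pass written in plain Python. Only the input size of 61 comes from the article; the hidden size, the random untrained weights, the sequence length and all function names are assumptions, and the researchers' actual network (its layers, training and output stage) is not reproduced here.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_params(n_in, n_hidden, rng):
    """Random, untrained weights and zero biases for the four LSTM gates."""
    def matrix(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    gates = ("input", "forget", "cell", "output")
    return {g: (matrix(n_hidden, n_in + n_hidden), [0.0] * n_hidden) for g in gates}


def lstm_pass(seq, params, n_hidden):
    """Run one LSTM over `seq` and return the hidden state at every step."""
    h = [0.0] * n_hidden  # short-term (hidden) state
    c = [0.0] * n_hidden  # long-term (cell) state
    outputs = []
    for x in seq:
        z = x + h  # concatenate the current input with the previous hidden state
        pre = {
            g: [sum(w * v for w, v in zip(row, z)) + b for row, b in zip(W, bias)]
            for g, (W, bias) in params.items()
        }
        i_gate = [sigmoid(v) for v in pre["input"]]
        f_gate = [sigmoid(v) for v in pre["forget"]]
        cand = [math.tanh(v) for v in pre["cell"]]
        o_gate = [sigmoid(v) for v in pre["output"]]
        c = [f * ck + i * g for f, ck, i, g in zip(f_gate, c, i_gate, cand)]
        h = [o * math.tanh(ck) for o, ck in zip(o_gate, c)]
        outputs.append(h)
    return outputs


def bilstm(seq, fwd_params, bwd_params, n_hidden):
    """Concatenate a start-to-end pass with an end-to-start pass, step by step."""
    forward = lstm_pass(seq, fwd_params, n_hidden)
    backward = lstm_pass(seq[::-1], bwd_params, n_hidden)[::-1]
    return [f + b for f, b in zip(forward, backward)]


N_INPUTS = 61  # input size stated in the article
N_HIDDEN = 8   # assumed for illustration
rng = random.Random(0)
fwd = make_params(N_INPUTS, N_HIDDEN, rng)
bwd = make_params(N_INPUTS, N_HIDDEN, rng)
# Five time steps of made-up feature vectors standing in for energy relationships.
sequence = [[rng.uniform(-1, 1) for _ in range(N_INPUTS)] for _ in range(5)]
states = bilstm(sequence, fwd, bwd, N_HIDDEN)
print(len(states), len(states[0]))
```

Each output step combines a state that has seen only earlier inputs with one that has seen only later inputs, which is how such a model gains access to both the past and the future of every position. A trained classifier would add a final layer on top of these states to produce the voice/music or genre decision.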

Musical experiments

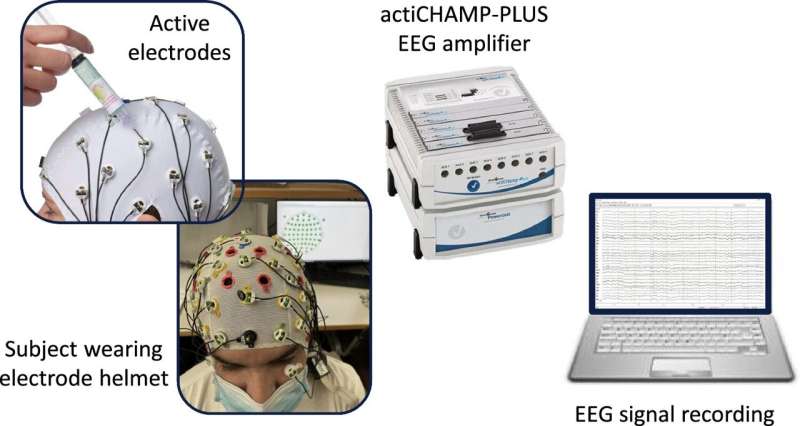

During the trials, the volunteers wore caps with electrodes that picked up electrical signals from the brain. At the same time, they were connected to speakers to hear the music. Synchronization marks were then established to check what was happening in the brain at each moment during the half hour of the experiment.

In the first test, participants listened in random order to 20 excerpts, each lasting 30 seconds, of the chorus or the catchy part of songs from different musical genres. After each excerpt they were asked if they liked the song, with three possible answers: “I like it,” “I like it a little” or “I don't like the song.” They were also asked if they already knew the song they heard, with the possibility of answering “I know the song,” “It sounds familiar” or “I don't know the song.”

During the second test, subjects heard 30 randomly selected sentences in different languages: Spanish, English, German, Italian and Korean. This second test was intended to identify brain activation upon hearing a voice regardless of whether the language being spoken is known.

Currently, the researchers are continuing their studies to evaluate other types of sounds and tasks and to reduce the number of EEG channels, which will make it possible to extend the usefulness of the model to other applications and environments.

More information:

Isaac Ariza et al, Energy-based features and bi-LSTM neural network for EEG-based music and voice classification, Neural Computing and Applications (2023). DOI: 10.1007/s00521-023-09061-3

Provided by

Fundación Descubre

Citation:

Measuring the brain’s response to different musical genres with artificial intelligence (2024, February 26)

retrieved 26 February 2024

from https://medicalxpress.com/news/2024-02-brain-response-musical-genres-artificial.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
